Algorithms For Computer Algebra Pdfs

As a hobby, I have written a basic computer algebra system (CAS). My CAS handles expressions as trees. I have advanced it to the point where it can simplify expressions symbolically (e.g., sin(pi/2) returns 1), and all expressions can be reduced to a canonical form.
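For readers who want something concrete, here is a minimal sketch of what such a tree representation with a canonical form might look like. It is not the poster's actual code: the `Node` class, the helper constructors, and the `simplify` rules (flattening nested sums and products, folding numeric constants, sorting operands) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Tuple


@dataclass(frozen=True)
class Node:
    """Hypothetical expression-tree node: op is "num", "sym", "+" or "*"."""
    op: str
    args: Tuple["Node", ...] = ()
    value: object = None  # Fraction for "num", str for "sym", unused otherwise


def num(n):
    return Node("num", value=Fraction(n))


def sym(name):
    return Node("sym", value=name)


def add(*xs):
    return Node("+", tuple(xs))


def mul(*xs):
    return Node("*", tuple(xs))


def simplify(e):
    """Return a canonical form: flattened, constants folded, operands sorted."""
    if e.op in ("num", "sym"):
        return e
    # Simplify children first, then flatten nested nodes with the same operator.
    flat = []
    for a in (simplify(a) for a in e.args):
        flat.extend(a.args if a.op == e.op else [a])
    identity = Fraction(0) if e.op == "+" else Fraction(1)
    const, rest = identity, []
    for a in flat:
        if a.op == "num":
            const = const + a.value if e.op == "+" else const * a.value
        else:
            rest.append(a)
    if e.op == "*" and const == 0:
        return num(0)
    rest.sort(key=repr)  # any fixed total order gives a canonical operand order
    if const != identity or not rest:
        rest.insert(0, Node("num", value=const))
    return rest[0] if len(rest) == 1 else Node(e.op, tuple(rest))
```

With a canonical form like this, structural equality of trees can stand in for a (limited) equality test: `simplify(add(sym("y"), sym("x")))` and `simplify(add(sym("x"), sym("y")))` produce the same tree.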

Develop algorithms: algebraic algorithms; symbolic-numeric algorithms, e.g. gcd(x + 1, x^2 + 2.01x + 0.99). Study how to build computer algebra systems: memory management, higher-order type systems, optimizing compilers. Mathematical knowledge management: representation of mathematical objects.

Algorithms for Computer Algebra, by Keith O. Geddes, Stephen R. Czapor and George Labahn. ISBN 0-7923-9259-0 (Kluwer Academic Publishers). Volume 79, Issue 484. Alistair Fitt.


It can also do differentiation.

Using this paradigm, what kinds of algorithms are there for solving algebraic equations? An equation in my model would be represented as an (=) tree with two subtrees that are the left and right expressions. I know there is no 'magic bullet' for solving all equations, but are there algorithms out there that are designed to symbolically solve an equation? If there aren't, what would be the general approach? What classes can equations be split into (so that I might be able to implement an algorithm for each kind)? I don't want to use naive methods and then paint myself into a metaphorical corner.

This does not really answer the question, but I don't think that computer algebra is really about solving equations.

For most kinds of equations I can think of (polynomial equations, ordinary differential equations, etc.), a closed-form solution using predefined primitives usually does not exist, and when it does, it is less useful than the equation itself. For example, consider univariate polynomial equations. It is not always possible to solve these equations using nth roots, and when it is, it is smarter to represent a solution of such an equation by the equation itself.
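One common way to make "represent a solution by the equation itself" concrete is to pair the polynomial with an interval that contains exactly one of its real roots. The answer does not say which representation it has in mind, so treat the following as an assumed sketch: the function names `evaluate` and `refine` are hypothetical, and the code assumes the polynomial changes sign across the starting interval.

```python
from fractions import Fraction


def evaluate(coeffs, x):
    """Horner evaluation; coeffs run from the highest degree down to the constant."""
    acc = Fraction(0)
    for c in coeffs:
        acc = acc * x + c
    return acc


def refine(coeffs, lo, hi, width):
    """Shrink [lo, hi] by bisection until it is at most `width` wide.

    Assumes the interval contains exactly one simple root, so the
    polynomial has opposite signs at the two endpoints.
    """
    lo, hi = Fraction(lo), Fraction(hi)
    f_lo = evaluate(coeffs, lo)
    assert f_lo * evaluate(coeffs, hi) < 0, "no sign change on the interval"
    while hi - lo > width:
        mid = (lo + hi) / 2
        f_mid = evaluate(coeffs, mid)
        if f_mid == 0:
            return mid, mid  # the root happens to be rational
        if f_lo * f_mid < 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return lo, hi


# sqrt(2) represented exactly as "the root of x^2 - 2 lying in [1, 2]".
sqrt2 = ([1, 0, -2], 1, 2)
lo, hi = refine(*sqrt2, Fraction(1, 1000))
```

The number is never approximated by its representation; the interval is only refined on demand, for instance when two such numbers need to be compared.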

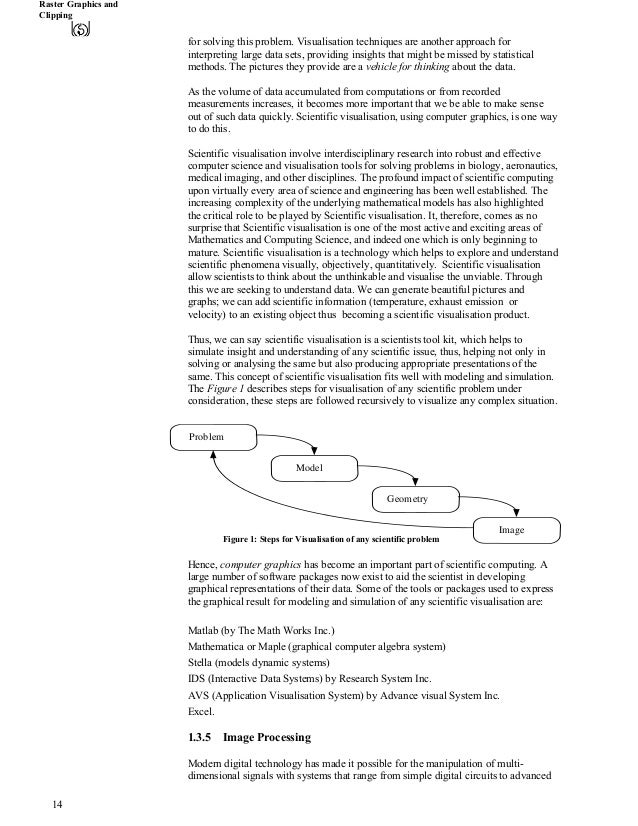

With this representation you can perform addition and multiplication easily.

In my opinion, computer algebra is more about manipulating the equations themselves. For example, you have two univariate polynomial equations, virtually defining two algebraic numbers, and you want to compute the equation satisfied by the sum of these two numbers. Most of the time you want equality to be decidable. You have to delimit carefully the class of objects you are working with, because if the class is too wide the equality decision problem may quickly become undecidable.

Of course, there are numerous situations in computer algebra where one wants to solve an equation. Of fundamental importance are linear equations; they are ubiquitous. I also think of polynomial systems. In the domain of ordinary differential equations, I think of rational resolution (given an ODE, you want to find all rational solutions) or power series resolution.

To answer your question more precisely, I think you should first implement the resolution of linear systems of equations. You may use Gaussian elimination. When efficiency becomes an issue, you may have a look at Bareiss' algorithm. After that, there is a whole bunch of various specialised algorithms to consider.

Beyond that, it all depends on what you are interested in. You could implement algebraic numbers or polynomial factorization.
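The linear-algebra starting point suggested above can be sketched in a few lines. This is an illustrative sketch rather than a tuned implementation: `solve_exact` does Gaussian elimination over the rationals with Python's `Fraction`, and `bareiss_det` shows the fraction-free idea behind Bareiss' algorithm on the simpler task of computing an integer determinant. Both function names are made up for this example.

```python
from fractions import Fraction


def solve_exact(a, b):
    """Solve a x = b by Gauss-Jordan elimination over the rationals.

    Returns the solution as a list of Fractions, or None if a is singular.
    """
    n = len(a)
    # Augmented matrix [a | b] with exact rational entries.
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def bareiss_det(a):
    """Determinant of a square integer matrix by fraction-free elimination.

    Every division by the previous pivot is exact, so all intermediate
    values stay integers and their size grows only moderately.
    """
    m = [list(row) for row in a]
    n = len(m)
    sign, prev = 1, 1
    for k in range(n - 1):
        if m[k][k] == 0:
            swap = next((r for r in range(k + 1, n) if m[r][k] != 0), None)
            if swap is None:
                return 0  # the whole column is zero: singular matrix
            m[k], m[swap] = m[swap], m[k]
            sign = -sign
        for i in range(k + 1, n):
            for j in range(k + 1, n):
                m[i][j] = (m[i][j] * m[k][k] - m[i][k] * m[k][j]) // prev
        prev = m[k][k]
    return sign * m[n - 1][n - 1]
```

For example, `solve_exact([[2, 1], [1, 3]], [3, 5])` gives the exact solution x = 4/5, y = 7/5. In a CAS the matrix entries would be expression trees or polynomials rather than integers, which is where avoiding fractions starts to pay off.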

You might also be interested in numerical computation, fancy linear algebra, arbitrary precision computation, etc.